|

ALIENSHIFT talks about Extraterrestrials and their cultures.

"V" also have

been called a New Hope for Humanity, Pioneers in Alientology, the study of UFOs, Earth Changes, extraterrestrials, Moon

Base, Mars colony, Deep Underground Military Bases, super soldiers, GMO, Illuminati 4th dimensional workings, Planet

Nibiru, Global warming, Pole Shift of 2012, Life on MARS, U.S. bases on Moon and MARS, Alternative 3, Time travel, Telepathy,

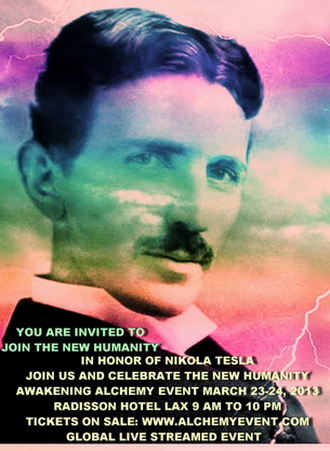

Teleportation, Metaphysics, The Rainbow Project, Project Invisibility, Phoenix Project, Teleportation Projects, Nikola Tesla,

John Von Neumann, USS Eldridge, The Montauk Chair, Dr. Wolf, Alternate Reality, Warping Space Time, HAARP Project and Weather

Control, Alternate Time Lines, ET Taught Military the Laser & Stealth Technology, Tesla Towers, Hollow Earth, Teleportation

to the Planet MARS and NIBIRU, Time travel back to Atlantis, how to build a Teleportation Machine, Underground Extraterrestrial

Bases, Tesla Arranges ET's meeting, Pleiadians, Gray Reptilians, Arcturians, Tall Whites, Ancient Civilizations, Time

Tunneling, Grey Alien projects, Atlantis, Secret Societies, German Mars Projects, Nazi UFO, Nazi Gold and Fort Knox, Albert

Einstein, Nikola Tesla, Anti Christ using A.I. and Microchip Implants, Worm Holes, Space Time, Moon, Moon Landing, Rings of

Saturn, Life on Venus, Time Travel Machine Built by GE in the next 20 years, Weather Control, Psychic Frequency, Time Vortex, Face

of Mars, Ancient Civilizations, Mars Ruins, Telepathic Thought and Powers of the Mind, Artificial Intelligence, ET message

of Islam, Islamic Jihadist Revival and Islamophobia created by Western Alliance to offset the Chinese and Soviet Balance of

Power by creating Al-Qaeda in Afghanistan during the 80s, Montauk Base, Zero Time frame Reference, Akashic location system,

Ancient Religious artifacts in Iraq and MARS, Alien walk-ins, Jesus, Sci Fi UFO video in New York, Philadelphia Experiment.

The subconscious mind, Video of FOX 11 news on Chicago O'Hare UFO Sighting. Numerology and effect of numbers: 0, 1, 11,

1111, 1112, 1119, 1911, 2111, 511, 711, 811, 911, 1212, 555, 786, 19, 110, 2012, 999, 888, 777. Current Cycle of Man ends

as MAYAN CALENDAR ends on Friday 12/21/2012. Watch your back on that Day! Bob Lazar, John Lear, Mars Face, Mars Pyramid,

Why are we not allowed to have a Dept. of UFOLOGY and ET studies at Yale, Princeton, UCLA, USC, CALTECH, MIT

or Harvard? American Torture in Abu Gulag and on the Air Secret planes new way of American Democracy! Sufism the Alchemy of Happiness, evolution, Dan Burisch and other famous scientists video of J-ROD at AREA

51, S4 level and New Mexico DULCE Alien Facility, Why Mossad killed JFK to cover Israel Nuclear Facility inspection, Islamic

Prince was killed in Princess Diana womb to avert the future time line, Dalai LAMA never let go of Tibet and fight with China,

There are no Oil shortages and global warming, Earth warming is due to arrival of Planet Nibiru or Planet of Crossing which

is heating up the whole solar system, Saturn, Mars, Uranus, Mayan Calendar year 2012 your time line is crossing get

ready prepare for Contact, Solar System causing global warming Nibiru approaching, GOOGLE, You Tube UFO Videos, ALIEN WEBBOT,

MAYAN CALENDAR ENDS 2012, Big Foot, Milky Way and the Big Bang, real UFO, UFO photos, Information page on ET Civilizations,

ALIEN and UFO videos, story of Real Aliens, picture of Aliens, Magnetic Pole Shift, ET CO-Operation in Iran and IRAQ, crystals,

NIBIRU, 2012, METEORITE, IMPACT, EARTH, GIANT, NEPHILIM, ALIEN,

ALIENS. Vote OBAMA, SAEED DAVID FARMAN

Bermuda triangle, Chupacabra, ISLAMIC UFOLOGY, MAJESTIC 12, SHADOW

GOV, Rabbit Foot and Cracking the CODE, CROP CIRCLES, CLONING, MASNAVI OF RUMI with Rumi You tube Videos, METATRON, DRUNVALO

MELCHIZEDEK, MESSAGE OF KRYON, Master Kuthumi and Ker-On. US SENATORS, MESSAGE of Commander Hatonn, ST. Germain on ASCENSION

and AG-Agria, John Lear, WILLIAM COOPER VIDEOS, ALEX COLLIER VIDEOS. Bush Skull and Bones family connection to Illuminati

CFR New World order. MySpace in Paris Hilton Hotels within Google, Yahoo, MSN, EBAY, MAPQUEST, TIGERDIRECT.COM, NEWEGG.COM,

BUY.COM, Some playing Golf on YouTube.com, with Disney Pokemon play Game, also free music downloads in CNN.COM, ABC.COM, CBS.COM,

NASA.COM, etc. Allah is Great Long Live freedom and ET message of Love and Islam. Master of all Masters Ibn Arabi, Persian

music the Alchemy of Soul, SUFI MASTERS, IMAM MAHDI, Preparation prayer for Pole Shift 2012 in Mecca, Nostradamus, Oil, Flying

Saucers Antimatter, Gravity waves DISCS at S4 Groom Lake or Papoose Lake nicknamed AREA 51 in Nevada.

|